
Deploying Keras models: Flask & TF Serving

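A trained Keras model can be deployed in two ways:

- Save the model in h5 format with Keras's save method, then load the h5 model in a Python web framework (Flask here) and expose it through an HTTP API.

- Export the model in pb (SavedModel) format and load it with TensorFlow Serving (TF Serving), which serves HTTP requests.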

The first approach deploys the trained Keras model from an h5 file. The model is a text classification model.

1. Saving the model

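```python
from keras.models import Sequential

model = Sequential()
# model.add ....  (layer definitions not shown in the original)
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['acc'])
model.summary()  # summary() prints by itself; wrapping it in print() also prints "None"

# x_train / y_train: training data prepared earlier (not shown)
model.fit(x_train, y_train, validation_split=0.25, epochs=15, batch_size=1024)

# Weights only
model.save_weights('build/mini_test_weights.h5')
# Full model: architecture + weights + optimizer state (this is the file Flask loads)
model.save('build/mini_test_model.h5')
# Architecture only, as JSON
with open('build/mini_test_model.json', 'w') as outfile:
    outfile.write(model.to_json())
```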
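The Flask service in the next step loads a character dictionary (dictionary_mini.json) into its Tokenizer. The saving step above does not show where that file comes from. Below is a minimal sketch of persisting and reloading it. It assumes the training script fitted a Keras Tokenizer named `tokenizer`. `save_word_index`, `load_word_index` and the path `build/dictionary_mini.json` are hypothetical names chosen for illustration.

```python
import json


def save_word_index(word_index, path):
    """Write a Tokenizer's word_index (dict: character -> int id) to a UTF-8 JSON file."""
    with open(path, 'w', encoding='utf-8') as f:
        # ensure_ascii=False keeps Chinese characters readable in the file
        json.dump(word_index, f, ensure_ascii=False)


def load_word_index(path):
    """Read the dictionary back; keys come back as str, ids as int."""
    with open(path, 'r', encoding='utf-8') as f:
        return json.load(f)


# Usage at training time (hypothetical path; `tokenizer` is the fitted Tokenizer):
# save_word_index(tokenizer.word_index, 'build/dictionary_mini.json')
```

By default `json.dump` escapes non-ASCII characters, so the file would be pure ASCII. With `ensure_ascii=False` it is written as real UTF-8, which is why both helpers open the file with an explicit encoding.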

2. Serving the h5 model with Flask

```python
import json

from flask import Flask, jsonify, request
from keras.models import load_model
from keras.preprocessing import sequence
from keras.preprocessing.text import Tokenizer

app = Flask(__name__)

# Load the model and the character dictionary once, at startup
model = load_model('D:\\mini_test_model.h5')

tokenizer = Tokenizer(filters='\t\n', char_level=True)
word_dict_file = 'D:\\dictionary_mini.json'
with open(word_dict_file, 'r', encoding='utf-8') as infile:
    tokenizer.word_index = json.load(infile)


def get_sequence(texts):
    """Convert a list of strings into character-id sequences padded to length 100."""
    x_token = tokenizer.texts_to_sequences(texts)
    x_processed = sequence.pad_sequences(x_token, maxlen=100, value=0)
    return x_processed


@app.route('/')
def hello_world():
    return 'Hello World!'


@app.route('/predict', methods=['GET', 'POST'])
def predict():
    # silent=True returns None instead of raising when the body is not valid JSON
    data = request.get_json(silent=True) or {}
    if 'values' not in data:
        return jsonify({'result': 'no input'})
    arr = data['values']  # expected: a list of strings, one per text
    res = model.predict(get_sequence(arr))
    return jsonify({'result': res.tolist()})


if __name__ == '__main__':
    app.run()
```

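The /predict endpoint can also be called from Python. Below is a minimal client sketch that uses only the standard library. It assumes the Flask development server's default address, http://127.0.0.1:5000, which is what `app.run()` with no arguments uses. `build_predict_request` and `predict` are hypothetical helper names. The `values` field should be a list of strings: `get_sequence` passes it straight to `texts_to_sequences`, which treats each list element as one text.

```python
import json
import urllib.request


def build_predict_request(texts, url='http://127.0.0.1:5000/predict'):
    """Build a POST request whose JSON body matches what predict() reads."""
    body = json.dumps({'values': texts}, ensure_ascii=False).encode('utf-8')
    return urllib.request.Request(
        url,
        data=body,
        # request.get_json() only parses bodies sent as application/json
        headers={'Content-Type': 'application/json'},
        method='POST',
    )


def predict(texts, url='http://127.0.0.1:5000/predict'):
    """Send the request and decode the JSON reply ({'result': [...]})."""
    with urllib.request.urlopen(build_predict_request(texts, url), timeout=10) as resp:
        return json.loads(resp.read().decode('utf-8'))
```

With the service running, `predict(['some text'])` returns a dict whose `result` entry holds one row of model outputs per input text.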

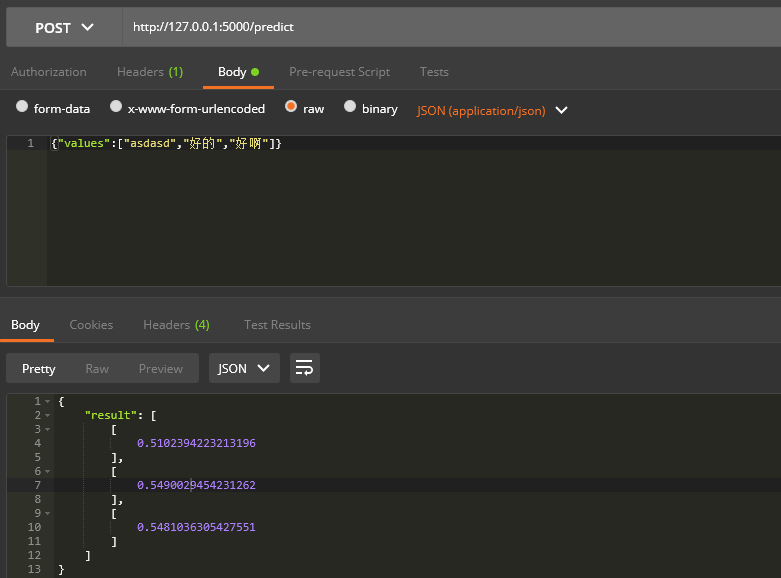

使用postman请求示例:

3. Loading with TF Serving

Export the Keras model as a pb model (TensorFlow SavedModel):

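```python
import os
import shutil

import tensorflow as tf

# Note: get_session() and simple_save() are TF 1.x APIs and are not available in TF 2.x
tf.keras.backend.clear_session()
tf.keras.backend.set_learning_phase(0)  # inference mode (e.g. dropout disabled)

model = tf.keras.models.load_model('./mini_test_model.h5')

export_path = './model/1'  # "1" is the version directory TF Serving looks for
if os.path.exists(export_path):
    # simple_save refuses to write into an existing, non-empty directory
    shutil.rmtree(export_path)

with tf.keras.backend.get_session() as sess:
    tf.saved_model.simple_save(
        sess,
        export_path,
        inputs={'inputs': model.input},
        outputs={t.name: t for t in model.outputs})
```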

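The export writes to ./model/1 for a reason. TF Serving treats each integer-named subdirectory of the model base path as a model version and, by default, serves the highest one. Before mounting the directory into the container, a quick stdlib check like the one below can catch a wrong path. `list_servable_versions` is a hypothetical helper. It only checks for saved_model.pb, which is far less thorough than Serving's own loader.

```python
import os


def list_servable_versions(base_path):
    """Return the sorted version numbers under base_path that look like SavedModel exports.

    A subdirectory counts only if its name is a decimal number and it
    contains a saved_model.pb file (a simplified check).
    """
    versions = []
    for name in os.listdir(base_path):
        pb_file = os.path.join(base_path, name, 'saved_model.pb')
        if name.isdecimal() and os.path.isfile(pb_file):
            versions.append(int(name))
    return sorted(versions)
```

After the export above, `list_servable_versions('./model')` should include 1. An empty list usually means the base path points one level too deep (at ./model/1 itself) or too shallow.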

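If the model uses custom functions, for example F1-score metrics, and was compiled like this:

```python
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['acc', f1_m, precision_m, recall_m])
```

then custom_objects must be passed when loading it. Models without custom functions can skip this step:

```python
from keras.models import load_model

# f1_m, precision_m and recall_m must be defined (or imported) in this script,
# exactly as they were at training time
model = load_model('build/category.h5',
                   custom_objects={'f1_m': f1_m, 'precision_m': precision_m, 'recall_m': recall_m})
# Optional: a full-model .h5 file already contains the weights
model.load_weights('build/category-weight.h5')
model.compile(loss='binary_crossentropy', optimizer='adam',
              metrics=['acc', f1_m, precision_m, recall_m])
```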

Once the pb model has been generated, set up the TF Serving service with Docker:

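- First pull the image with docker pull tensorflow/serving. If a GPU is available, the GPU build can be pulled instead with docker pull tensorflow/serving:latest-gpu (running it also requires the NVIDIA container runtime).

- Start the container:

  ```shell
  docker run -p 8501:8501 -v <model path>:/models/<model name> -e MODEL_NAME=<model name> -t tensorflow/serving &
  ```

  For example, serving the model under the name mini (the mounted directory must contain the version subdirectory, such as 1/, written by the export step):

  ```shell
  docker run -p 8501:8501 -v /mnt/build/model/:/models/mini -e MODEL_NAME=mini -t tensorflow/serving &
  ```

  Use docker ps to list running containers and docker stop <container ID> to stop one.

- Test:

  ```shell
  curl -d '{"instances": [{"inputs":[0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1]}]}' -X POST "http://localhost:8501/v1/models/mini:predict"
  ```

  The response is a JSON object whose predictions field holds the list of model outputs.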
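TF Serving only sees the exported graph, not the Tokenizer. The client must therefore send already-encoded, padded id sequences, as in the curl test, where the input is 99 zeros followed by 1. The sketch below mimics what `get_sequence` does on the Flask side and assumes Keras defaults: text is lower-cased, characters missing from word_index are dropped (no oov_token is set), and both padding and truncation are 'pre'. word_index is assumed to be loaded from dictionary_mini.json. `encode_text`, `build_serving_body` and `serving_predict` are hypothetical names. Compare its output with `get_sequence` on a few inputs for the Keras version in use.

```python
import json
import urllib.request


def encode_text(text, word_index, maxlen=100, lower=True):
    """Approximate Tokenizer(char_level=True).texts_to_sequences + pad_sequences(maxlen, value=0)."""
    if lower:
        text = text.lower()
    # Characters not in the dictionary are skipped (assumes no oov_token)
    ids = [word_index[ch] for ch in text if ch in word_index]
    ids = ids[max(len(ids) - maxlen, 0):]  # 'pre' truncation keeps the last maxlen ids
    return [0] * (maxlen - len(ids)) + ids  # 'pre' padding with 0


def build_serving_body(texts, word_index, maxlen=100):
    """Row-format body as in the curl test: one {'inputs': [...]} per text."""
    return {'instances': [{'inputs': encode_text(t, word_index, maxlen)} for t in texts]}


def serving_predict(texts, word_index, url='http://localhost:8501/v1/models/mini:predict'):
    """POST the encoded texts to TF Serving and return the 'predictions' list."""
    body = json.dumps(build_serving_body(texts, word_index)).encode('utf-8')
    req = urllib.request.Request(url, data=body,
                                 headers={'Content-Type': 'application/json'},
                                 method='POST')
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read().decode('utf-8'))['predictions']
```

The key 'inputs' matches the input name given to simple_save during export. If the export used a different name, the body has to change with it.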
