
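Overview: The earlier article "Monitoring Kafka Topic Backlog with Zabbix" used Zabbix low-level discovery (LLD) to monitor the lag of topics and partitions across multiple consumer groups. In real-world use we ran into a problem with that setup, so this article revises and optimizes the monitoring to keep interested readers from falling into the same trap.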

Partition auto-discovery

Before the optimization:

```
# Discovery config file
vim consumer-groups.conf
# One entry per line, in ConsumerGroup|Topic format
test-group|test

# Run the script to discover the partitions of the configured consumer group and topic
bash consumer-groups.sh discovery
{
	"data": [
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"0" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"1" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"3" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"2" }
	]
}
```

After going live we confirmed that this works as long as the config file contains only test-group|test. However, once we added test-group|test1 as required (a second topic consumed by the test-group consumer group), discovery returned the following:

```
# Before the optimization
vim consumer-groups.conf
# One entry per line, in ConsumerGroup|Topic format
test-group|test
test-group|test1

# Run the script to discover the partitions of the configured consumer groups and topics
bash consumer-groups.sh discovery
{
	"data": [
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"0" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"1" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"3" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"2" }
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test1", "{#PARTITION}":"0" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test1", "{#PARTITION}":"1" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test1", "{#PARTITION}":"2" }
	]
}
```

Anyone familiar with the Zabbix LLD format will notice that the last partition entry of each topic is missing its separating ',' (here, the entry for test partition 2). The output is therefore not valid JSON, which is what breaks our monitoring items, so the script needs a further fix. After the fix, the output should look like this:

```
# After the optimization
vim consumer-groups.conf
# One entry per line, in ConsumerGroup|Topic format
test-group|test
test-group|test1

# Run the script to discover the partitions of the configured consumer groups and topics
bash consumer-groups.sh discovery
{
	"data": [
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"0" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"1" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"3" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test", "{#PARTITION}":"2" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test1", "{#PARTITION}":"0" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test1", "{#PARTITION}":"1" },
		{ "{#GROUP}":"test-group", "{#TOPICP}":"test1", "{#PARTITION}":"2" }
	]
}
```
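Instead of detecting the last row, another way to avoid the missing or trailing comma altogether is to write the separator before every entry except the first. The minimal sketch below assumes a hypothetical helper `emit_lld` that reads `group topic partition` lines on stdin (in the real script these would be built from the `cal_topic` output); it is an alternative technique, not the author's script.

```bash
#!/bin/bash
# Hypothetical helper: print Zabbix LLD JSON from "group topic partition"
# lines on stdin. The comma is emitted *before* each entry except the first,
# so the JSON stays valid regardless of how many groups, topics or
# partitions there are, and even if some config entry yields no rows.
emit_lld() {
    local group topic partition sep=""
    printf '{\n\t"data": [\n'
    # "|| [ -n ... ]" also handles a final line without a trailing newline
    while read -r group topic partition || [ -n "$group" ]; do
        # Skip blank or incomplete lines
        [ -z "$partition" ] && continue
        printf '%s\t\t{ "{#GROUP}":"%s", "{#TOPICP}":"%s", "{#PARTITION}":"%s" }' \
            "$sep" "$group" "$topic" "$partition"
        sep=$',\n'
    done
    printf '\n\t]\n}\n'
}
```

For example, `printf 'test-group test 0\ntest-group test1 0\n' | emit_lld` prints two entries separated by exactly one comma, and empty input still yields a valid `{"data": []}` document.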

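Getting the lag of the item "test-group/test/partition X"

With the discovered data, we can then fetch the lag of each partition:

```
# After the optimization
# lag of test-group / test / partition 0
bash consumer-groups.sh lag test-group test 0
# lag of test-group / test / partition 1
bash consumer-groups.sh lag test-group test 1
# lag of test-group / test1 / partition 0
bash consumer-groups.sh lag test-group test1 0
```

As the commands show, the lag is looked up by consumer group, topic and partition together. The unoptimized version passed only the partition number, so it could not tell apart the lag of the same partition in different consumer groups or topics:

```
# Before the optimization
# lag of partition 0
bash consumer-groups.sh lag 0
# lag of partition 1
bash consumer-groups.sh lag 1
# lag of partition 2
bash consumer-groups.sh lag 2
# lag of partition 3
bash consumer-groups.sh lag 3
```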
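Within a single `--describe` call the consumer group is already fixed by `--group`, so only the topic and the partition remain to be matched. The sketch below applies the same awk filter that the lag branch of the final script uses to hypothetical sample rows; the helper name `lag_of` and all numbers are made up, and the column layout (TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG ...) is the one that filter assumes. It shows why the partition number alone is ambiguous: partition 0 exists in both `test` and `test1`.

```bash
#!/bin/bash
# Hypothetical helper: print the LAG of one topic/partition from describe
# output on stdin. Assumes topic in $1, partition in $2, lag in $5.
lag_of() {
    local topic=$1 partition=$2
    awk -v t="$topic" -v p="$partition" '$1 == t && $2 == p { print $5 }'
}

# Hypothetical sample output for one consumer group (made-up values)
sample='TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID
test 0 100 150 50 consumer-1 /192.168.3.55 consumer-1
test 1 200 200 0 consumer-1 /192.168.3.55 consumer-1
test1 0 40 70 30 consumer-2 /192.168.3.55 consumer-2'

echo "$sample" | lag_of test 0    # prints 50
echo "$sample" | lag_of test1 0   # prints 30
```

Filtering on the partition column alone would return both 50 and 30 for partition 0, which is exactly the ambiguity of the unoptimized `lag 0` call.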

Final optimized script

```
# Discovery config file
vim consumer-groups.conf
# One entry per line, in ConsumerGroup|Topic format
test-group|test
test-group|test1
```

Discovery and lag script (vim consumer-groups.sh):

```bash
#!/bin/bash
# Comment: monitor topic lag per consumer group for alerting
# Config file format:
#   ConsumerGroup|Topic
#   test-group|test

CONF=/etc/zabbix/monitor_scripts/consumer-groups.conf

# Print the describe rows of topic $2 for consumer group $1
cal_topic() {
    if [ $# -ne 2 ]; then
        echo "parameter num error, failed to read topic info" >&2
        exit 1
    else
        /usr/local/kafka/bin/kafka-consumer-groups.sh --bootstrap-server 192.168.3.55:9092 \
            --describe --group "$1" | grep -w "$2" | grep -v none
    fi
}

# Topic + partition auto-discovery
topic_discovery() {
    printf "{\n"
    printf "\t\"data\": [\n"
    m=0
    num=$(wc -l < "$CONF")
    for line in $(cat "$CONF"); do
        m=$((m + 1))
        group=$(echo "$line" | awk -F'|' '{print $1}')
        topic=$(echo "$line" | awk -F'|' '{print $2}')
        cal_topic "$group" "$topic" > /tmp/consumer-group-tmp
        count=$(wc -l < /tmp/consumer-group-tmp)
        n=0
        while read -r row; do
            n=$((n + 1))
            topicp=$(echo "$row" | awk '{print $1}')
            partition=$(echo "$row" | awk '{print $2}')
            # Only the very last row (last partition of the last config entry) gets no comma
            if [ "$n" -eq "$count" ] && [ "$m" -eq "$num" ]; then
                sep=""
            else
                sep=","
            fi
            printf '\t\t{ "{#GROUP}":"%s", "{#TOPICP}":"%s", "{#PARTITION}":"%s" }%s\n' \
                "$group" "$topicp" "$partition" "$sep"
        done < /tmp/consumer-group-tmp
    done
    printf "\t]\n"
    printf "}\n"
}

case "$1" in
    discovery)
        topic_discovery
        ;;
    lag)
        # Pipe directly instead of through a shared temp file, so concurrent
        # agent checks cannot overwrite each other's data
        cal_topic "$2" "$3" | awk -v t="$3" -v p="$4" '$1 == t && $2 == p { print $5 }'
        ;;
    *)
        echo "Usage: /data/scripts/consumer-groups.sh discovery | lag <group> <topic> <partition>"
        ;;
esac
```

```
# Run manually
## Discovery
bash consumer-groups.sh discovery
## lag of test-group / test / partition 0
bash consumer-groups.sh lag test-group test 0
```
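The lag branch of the script reads the topic, partition and lag from fixed columns ($1, $2, $5), which assumes the `--describe` output has no leading GROUP column. Depending on the Kafka version, `kafka-consumer-groups.sh --describe` may print GROUP as the first column, shifting every position by one. A minimal sketch that locates the columns by their header names instead; `lag_by_header` is a hypothetical helper, and it assumes a header row containing TOPIC, PARTITION and LAG precedes the data rows, so it must be fed the raw describe output (the script's `grep` filters drop that header).

```bash
#!/bin/bash
# Hypothetical helper: print the LAG for topic $1, partition $2 from raw
# describe output on stdin, finding columns by header name, not position.
lag_by_header() {
    awk -v t="$1" -v p="$2" '
        # Until the header is found, check whether this row is it
        !have_header {
            for (i = 1; i <= NF; i++)
                if ($i == "TOPIC") have_header = 1
            if (have_header) {
                # Remember the field number of every column name
                for (i = 1; i <= NF; i++) col[$i] = i
                next
            }
        }
        # Data rows: compare topic and partition, print the LAG column
        have_header && $(col["TOPIC"]) == t && $(col["PARTITION"]) == p {
            print $(col["LAG"])
        }
    '
}
```

Usage, with the broker address from the script: `/usr/local/kafka/bin/kafka-consumer-groups.sh --bootstrap-server 192.168.3.55:9092 --describe --group test-group | lag_by_header test 0`. The same call works whether or not the output starts with a GROUP column.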

Integrating with Zabbix

1. Zabbix agent configuration file

```
vim userparameter_kafka.conf
UserParameter=topic_discovery,bash /data/scripts/consumer-groups.sh discovery
UserParameter=topic_lag[*],bash /data/scripts/consumer-groups.sh lag "$1" "$2" "$3"
```

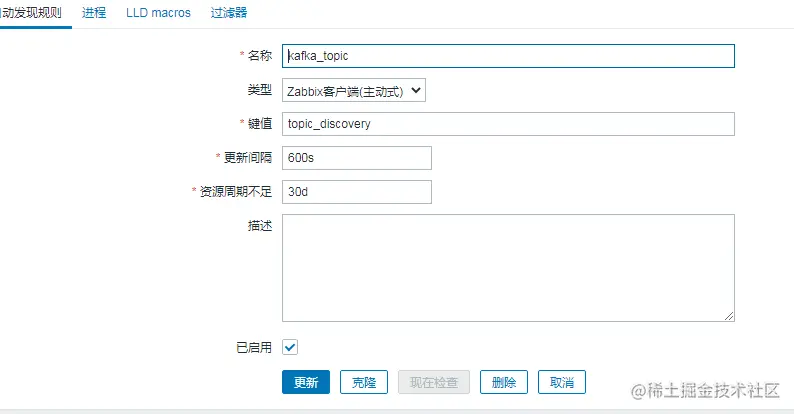

2. Zabbix low-level discovery

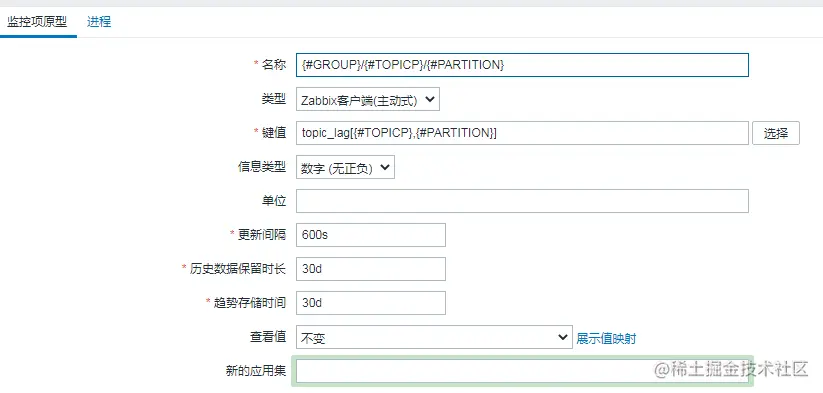

3. Item configuration
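Going by the LLD macros returned by discovery and the item key that appears in the alert in step 4 (topic_lag[test-group,test,1]), the item prototype presumably follows the pattern below. The name, type and type of information are assumptions rather than a copy of the original setup, and the key name must match the UserParameter key defined in step 1.

```text
Name:                {#GROUP}/{#TOPICP}/{#PARTITION}
Key:                 topic_lag[{#GROUP},{#TOPICP},{#PARTITION}]
Type:                Zabbix agent
Type of information: Numeric (unsigned)
```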

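4. Alert message

```
Alert host: Kafka_192.168.3.55
Host IP: 192.168.3.55
Host group: Kafka
Alert time: 2022.03.21 00:23:10
Severity: Average
Alert message: test-group/test/partition 1: data backlog 100
Alert item: topic_lag[test-group,test,1]
Problem details: test-group/test/1: 62
```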
